Scaling a web application is an essential aspect of managing its growth, ensuring its availability, and maintaining its performance. Kubernetes is a powerful container orchestration platform that simplifies scaling and managing containerized applications. One of the key features of Kubernetes is the Horizontal Pod Autoscaler (HPA), which allows automatic scaling of pods based on resource usage.

In this blog post, we discuss how we scaled ReBid Services on Kubernetes using HPA and explain the terminology involved.

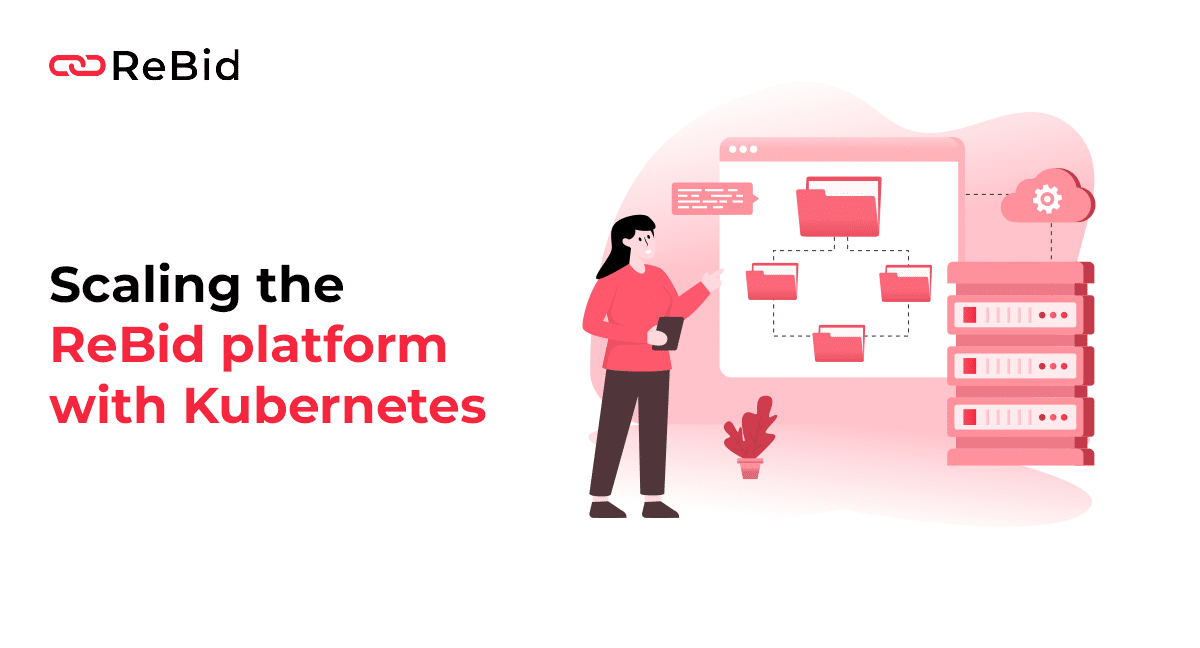

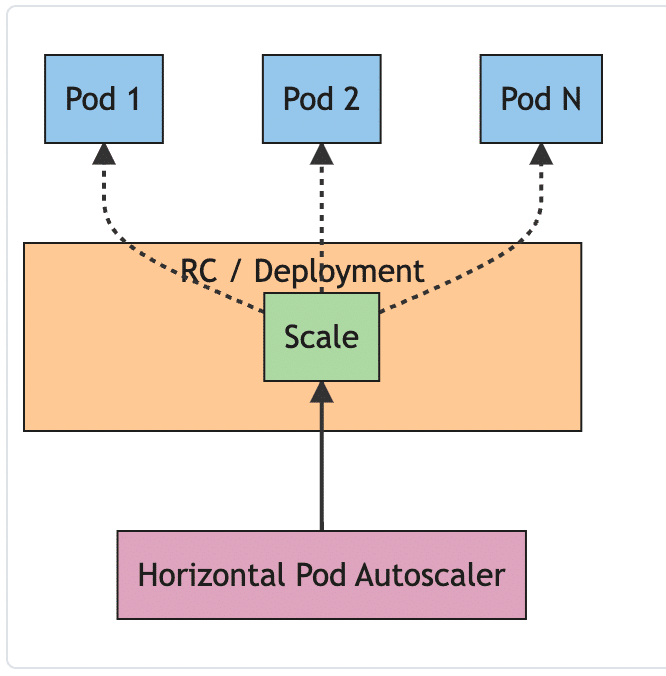

The figure shows a HorizontalPodAutoscaler controlling the scale of a Deployment.

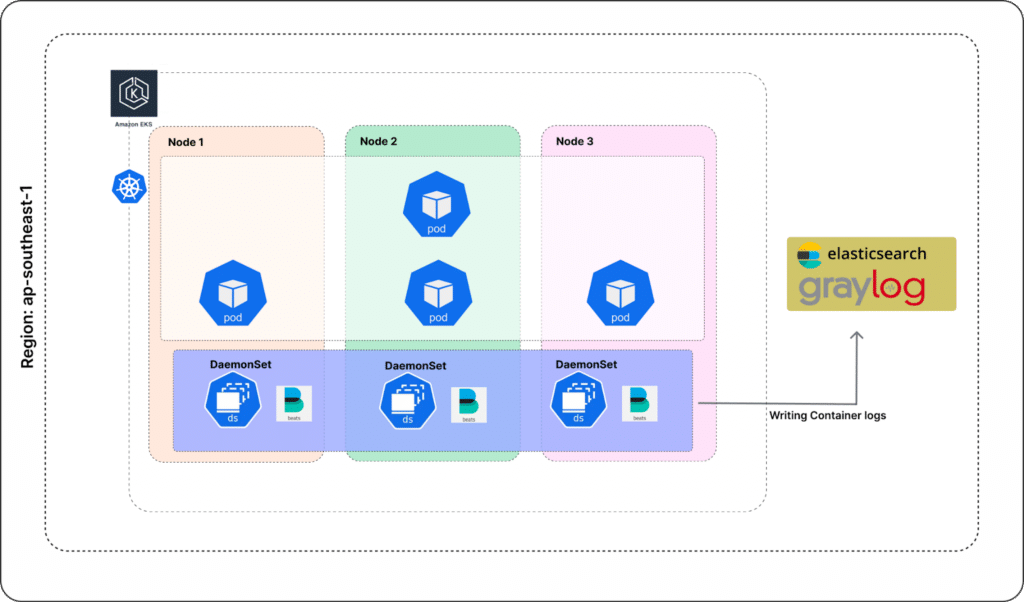

ReBid, a MADTech Intelligence Platform, has been scaling its business as it grows. Its mission is to bring transparency to the advertising industry by providing a better way for advertisers to reach consumers. The image below shows the rough architecture of the ReBid backend application services. It is a simple pods-on-nodes architecture. All the backend applications are containerized using Docker and deployed on Kubernetes. The application consists of multiple services, including the web server, database, and backend services. It is designed to handle a large number of users, and as the user base grows, it needs to scale dynamically to meet the demand.

Horizontal Pod Autoscaler (HPA) is a Kubernetes feature that automatically scales the number of pods in a deployment based on CPU usage, memory usage, or custom metrics. The HPA continuously monitors the resource utilization of the pods and adjusts the number of replicas to match the desired resource utilization level. HPA is an essential feature for applications that experience variable load or spikes in traffic.
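To make "adjusts the number of replicas" concrete, the scaling rule described in the Kubernetes documentation can be written as follows. The numbers in the worked example are illustrative assumptions, not measurements from ReBid.

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

For example, assume 4 replicas running at an average CPU utilization of 90% against a 70% target:

$$
\left\lceil 4 \times \frac{90}{70} \right\rceil = \lceil 5.14 \rceil = 6
$$

so the HPA would scale the Deployment from 4 to 6 pods. If the ratio of current to desired value is close enough to 1 (within a tolerance of 10% by default), no scaling takes place.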

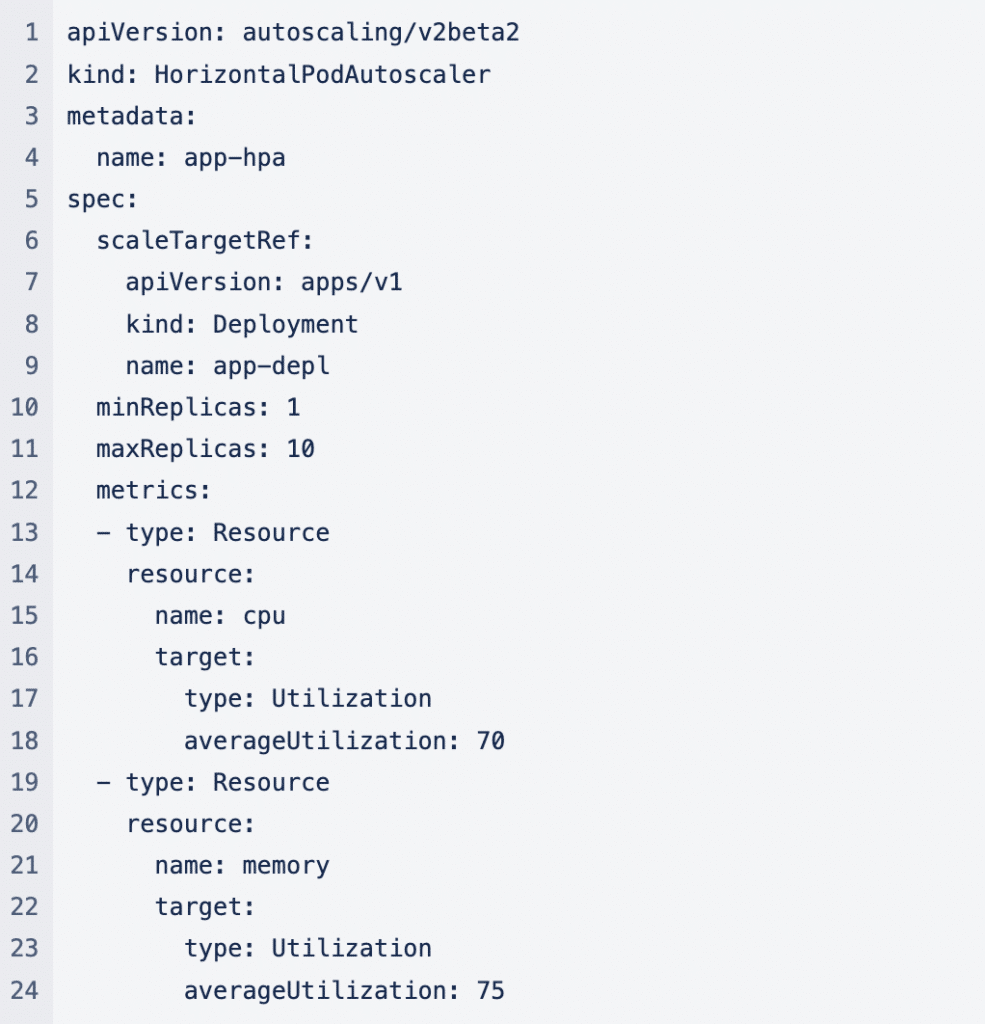

To scale ReBid Services using HPA, we first set up a Kubernetes cluster with the necessary resources. We then created a Deployment for each service in the application and set the minimum number of replicas to two. Next, we configured the HPA to monitor the CPU utilization of the pods and maintain an average utilization of 70%. For some applications, depending on how early or late scaling should kick in, we set the threshold to 80% instead. Below is a sample YAML file to show how it looks.
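The following is a minimal sketch of such a manifest using the `autoscaling/v2` API. The names `rebid-api` and `rebid-api-hpa` and the `maxReplicas` value are placeholders chosen for illustration. Only the minimum of two replicas and the 70% CPU target come from the setup described above.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: rebid-api-hpa          # hypothetical name
spec:
  scaleTargetRef:              # the Deployment this HPA scales
    apiVersion: apps/v1
    kind: Deployment
    name: rebid-api            # hypothetical Deployment name
  minReplicas: 2               # never scale below two pods
  maxReplicas: 10              # assumed upper bound, tune per service
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # use 80 to scale later
```

A manifest like this would typically be applied with `kubectl apply -f`, and its current state inspected with `kubectl get hpa`.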

To ensure that the HPA can scale the pods, we also set resource requests and limits for each container. The requests matter in particular: the HPA calculates CPU utilization as a percentage of the container's CPU request, so without a request it has nothing to measure against. We set the resource requests to the minimum amount each container needs and the resource limits to the maximum amount each container may use. This ensures that the containers have enough resources to function correctly and that no container can consume so much that it starves others on the same node.
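As a rough illustration, requests and limits are declared per container in the Deployment's pod template. The service name, image, and all CPU and memory values below are assumptions made for the example, not ReBid's actual settings.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rebid-api                  # hypothetical name
spec:
  replicas: 2                      # initial replica count
  selector:
    matchLabels:
      app: rebid-api
  template:
    metadata:
      labels:
        app: rebid-api
    spec:
      containers:
        - name: rebid-api
          image: example.com/rebid-api:1.0.0   # placeholder image
          resources:
            requests:              # baseline the HPA measures against
              cpu: 250m
              memory: 256Mi
            limits:                # hard cap for the container
              cpu: 500m
              memory: 512Mi
```

With these example values, a 70% utilization target corresponds to an average of 175m CPU per pod, since utilization is measured against the 250m request rather than the limit.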

As the user load on the application increases, the HPA automatically scales the number of replicas of each service to match the demand. This ensures that the application can handle the increased load and maintain its performance. The HPA also monitors the pods’ resource utilization and adjusts the number of replicas accordingly, ensuring that the application remains within the desired resource utilization level.

In conclusion, scaling ReBid Services on Kubernetes using HPA is a straightforward process: set up a Kubernetes cluster, deploy the application services, configure the HPA, and set resource requests and limits for each container. With HPA, the application scales dynamically while maintaining availability and performance, which makes it an essential feature for any Kubernetes-based application. In our case, it also helped us reduce costs by approximately 60%.